I recently watched the first-ever LlamaCon, Meta's brand-new AI developer conference. It was clearly geared toward developers and engineers, but even from a broader tech or business perspective, it had some fascinating updates.

So here’s a friendly, digestible recap of what stood out to me, especially from the first 30 minutes of the event, before the more technical fireside chat kicked in.

1. Meta Is Getting Serious About Making AI Easier to Use

One of the biggest announcements was the Llama API: basically, a much easier way for developers to plug Meta's AI models (called Llama) into websites, apps, or products.

Even if you're not a developer, think of it like this: Meta wants AI to be as plug-and-play as possible. Simpler setup, faster integration, and more flexibility. It's their way of saying, "We want more people building with our models."

2. The Llama 4 Model Family Is Here, Built for Different Needs

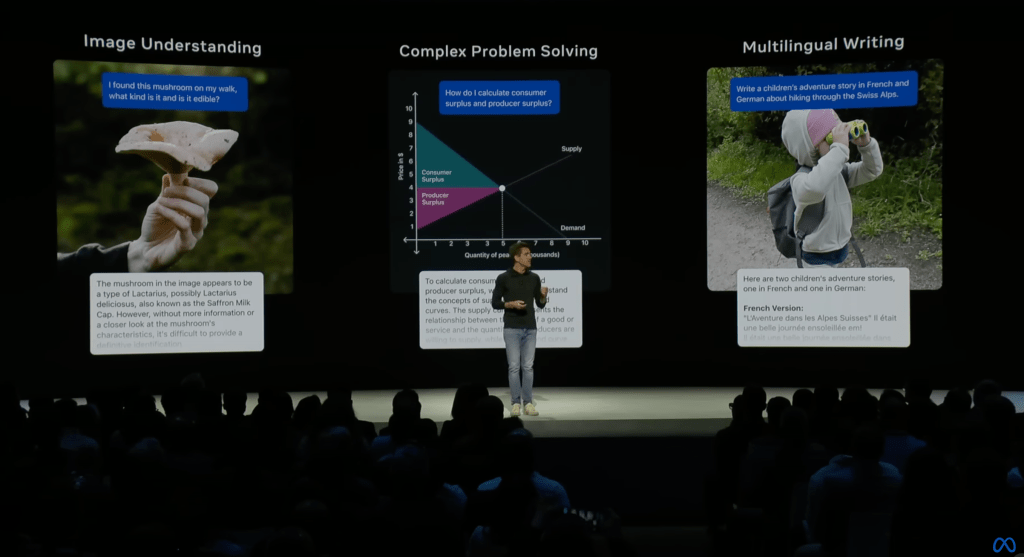

Meta showcased its three Llama 4 models, each designed for different types of users:

- Llama 4 Scout – a lightweight, budget-friendly model with a huge context window of up to 10 million tokens, meaning it can take in very long documents at once (perfect for longer content or research-type tasks)

- Llama 4 Maverick – a larger, more capable model that works across text and images and is strong at code

- Llama 4 Behemoth – still training, but it’s their biggest and most powerful one yet, aimed at science, math, and big enterprise tasks

In short, whether you’re a solo creator or a large company, there’s now a Llama model built for your use case.

3. Meta Doubled Down on Safety (and That’s a Good Thing)

It was refreshing to see how much Meta emphasized AI safety. They introduced new tools (such as Llama Guard 4, LlamaFirewall, and Llama Prompt Guard 2) to help prevent harmful outputs, prompt injection attacks (where someone sneaks instructions into an AI's input to trick it into doing something it shouldn't), and privacy risks.

Even if you're not building AI systems yourself, this matters because it means Meta is thinking ahead about how to use AI responsibly, not just powerfully.

4. Meta AI Is Coming to You – Through an App and… Glasses?

This might’ve been the most consumer-relevant part of the event:

Meta officially launched the Meta AI assistant as a standalone mobile app, which also works with the Ray-Ban Meta smart glasses.

Yes, you can literally look around and ask your glasses questions like:

- “What am I looking at?”

- “Translate this sign”

- “Tell me about this building”

The assistant runs on Llama models under the hood, meaning the same technology discussed at the developer conference is also powering real, everyday use cases. This was a nice reminder that AI isn't just for coders: it's being baked into the things we wear, carry, and interact with.

5. Meta Is Betting on Open Source

Meta made a strong point about keeping their AI models open source, meaning developers (and even curious hobbyists) can download the model weights and use, test, and adapt them, subject to Meta's Llama license terms.

It’s a very different strategy compared to competitors who keep everything locked behind APIs and subscriptions. And if you care about the future of accessible technology, that’s a pretty exciting stance.

Final Thought

Even though LlamaCon was geared toward engineers, it gave a clear signal to the rest of us: Meta’s not just trying to “catch up” in AI – they’re building a full ecosystem.

From easier tools for developers, to wearable smart glasses for consumers, to models that work across different media and languages, this isn't just about competing with ChatGPT. It's about shaping what everyday AI use looks like in the near future.

And yes, there really is a “little llama.” You just won’t find it at a petting zoo.
